AI in Diagnostics: Addressing Biases and Disparities for Equitable Healthcare

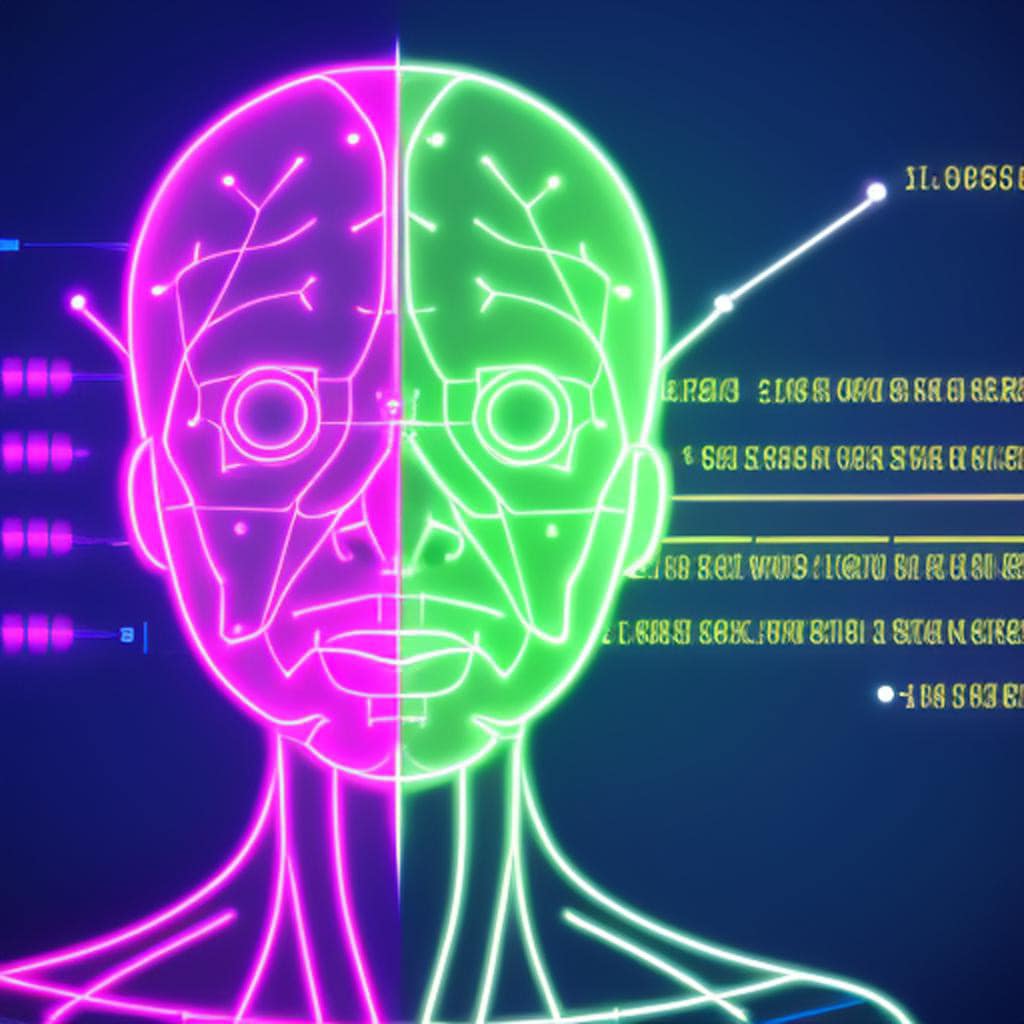

The integration of artificial intelligence (AI) in diagnostics holds great promise for advancing healthcare outcomes. However, it is imperative to address biases and disparities to ensure that AI-based diagnostics promote equitable healthcare for all individuals, regardless of their demographic characteristics. By proactively addressing these concerns, AI can contribute to reducing healthcare disparities and improving patient care on a broader scale.

One critical aspect to consider is algorithmic bias. AI models are trained on historical data, which may reflect existing biases and disparities in healthcare. As a result, an AI system can produce diagnostic outcomes that are less accurate, or systematically skewed, for certain populations. For example, a model trained mostly on records from one demographic group may detect disease less reliably in groups that were under-represented in its training data, and such errors translate into unequal access to quality care. Efforts should focus on building inclusive, diverse training datasets that span a wide range of demographic and clinical factors, which helps mitigate bias and supports fair, accurate diagnoses for all patients.
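As a minimal sketch of one mitigation, assume each training record carries a demographic group label (the labels, function names, and toy data below are hypothetical). The code measures how each group is represented and computes inverse-frequency sample weights, so that every group contributes the same total weight during training. Reweighting is only one technique among several and does not replace collecting more representative data.

```python
from collections import Counter


def group_shares(groups):
    """Return each group's share of the dataset."""
    counts = Counter(groups)
    total = len(groups)
    return {g: n / total for g, n in counts.items()}


def inverse_frequency_weights(groups):
    """Weight records so that every group contributes the same total weight.

    A record in group g gets total / (num_groups * count[g]), so the
    weights within each group sum to total / num_groups.
    """
    counts = Counter(groups)
    total = len(groups)
    num_groups = len(counts)
    return [total / (num_groups * counts[g]) for g in groups]


# Hypothetical toy data: group labels for 10 training records,
# with group "B" heavily under-represented.
groups = ["A"] * 8 + ["B"] * 2
print(group_shares(groups))  # {'A': 0.8, 'B': 0.2}

weights = inverse_frequency_weights(groups)
print(weights[0], weights[-1])  # 0.625 2.5
```

Many training APIs accept per-record weights of this kind; in scikit-learn, for example, many estimators take a `sample_weight` argument in `fit`.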

To achieve equitable healthcare with AI, disparities in access to healthcare resources and technologies must be addressed. This includes ensuring that underserved communities, including those in rural or low-income areas, have equal access to AI-based diagnostic tools. Collaborative initiatives involving policymakers, healthcare organizations, and technology developers can help bridge the digital divide and ensure the widespread availability of AI technologies in diverse healthcare settings.

Another crucial consideration is the cultural competence of AI algorithms. Diagnostic accuracy can vary across populations because of differences in disease prevalence, clinical presentation, and genetic factors. It is essential to develop AI systems that account for these differences and adapt to diverse patient populations. Ongoing research and validation studies should evaluate each algorithm's performance separately for each population, rather than only in aggregate, so that gaps in diagnostic accuracy and effectiveness are detected and corrected.
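Evaluating performance across populations can start with computing the usual metrics per group instead of only overall. The sketch below assumes binary labels (1 = condition present), a model's binary predictions, and a group label per patient; the toy data and function names are hypothetical. It reports sensitivity and specificity for each group and the largest sensitivity gap between groups.

```python
import math
from collections import defaultdict


def subgroup_metrics(y_true, y_pred, groups):
    """Sensitivity and specificity computed separately for each group.

    y_true and y_pred are 0/1 labels, where 1 means the condition is present.
    """
    tally = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        counts = tally[g]
        if t == 1:
            counts["tp" if p == 1 else "fn"] += 1
        else:
            counts["tn" if p == 0 else "fp"] += 1

    results = {}
    for g, c in tally.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        results[g] = {
            # NaN when a group has no cases of that class in the sample.
            "sensitivity": c["tp"] / positives if positives else math.nan,
            "specificity": c["tn"] / negatives if negatives else math.nan,
            "n": positives + negatives,
        }
    return results


def max_sensitivity_gap(results):
    """Largest difference in sensitivity between any two groups (NaNs ignored)."""
    values = [r["sensitivity"] for r in results.values()
              if not math.isnan(r["sensitivity"])]
    return max(values) - min(values) if values else math.nan


# Hypothetical toy data: 8 patients split across groups "A" and "B".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

results = subgroup_metrics(y_true, y_pred, groups)
for g, r in results.items():
    print(g, r)
print("sensitivity gap:", max_sensitivity_gap(results))  # 0.5
```

An aggregate score can hide such a gap: in this toy data the overall sensitivity is 0.75 (3 of 4 positive cases detected), while group B's sensitivity is only 0.5.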

Transparency and explainability of AI algorithms are fundamental to addressing biases and disparities. Healthcare professionals and patients must have a clear understanding of how an AI system arrives at its diagnostic decisions. Explainable AI techniques, which show which inputs drove a particular prediction, can help build trust and confidence in AI-based diagnostics. Open dialogue between AI developers and healthcare professionals can foster a shared understanding and surface concerns related to biases and disparities.
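For simple models, explanations can be exact. The sketch below assumes a hypothetical logistic-regression risk model whose feature names, weights, and baseline patient are made up for illustration and do not come from any real clinical model. Because the model is linear in log-odds, each feature's contribution relative to the baseline is its weight times its deviation from the baseline value, and the contributions sum exactly to the change in log-odds. Non-linear models need approximate attribution methods, such as SHAP or LIME, instead.

```python
import math


def logistic(z):
    """Convert log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def explain_linear(weights, bias, patient, baseline):
    """Exact per-feature contributions for a linear (logistic) model.

    Returns the contributions to the log-odds relative to the baseline,
    plus the predicted probabilities for the baseline and the patient.
    """
    contribs = {f: w * (patient[f] - baseline[f]) for f, w in weights.items()}
    logit_base = bias + sum(w * baseline[f] for f, w in weights.items())
    # Linear model: baseline log-odds plus contributions equals patient log-odds.
    logit_patient = logit_base + sum(contribs.values())
    return contribs, logistic(logit_base), logistic(logit_patient)


# Hypothetical model and patients; all values are illustrative only.
weights = {"age": 0.04, "systolic_bp": 0.02, "smoker": 0.7}
bias = -6.0
baseline = {"age": 50, "systolic_bp": 120, "smoker": 0}
patient = {"age": 65, "systolic_bp": 150, "smoker": 1}

contribs, p_base, p_patient = explain_linear(weights, bias, patient, baseline)
print(f"baseline risk {p_base:.3f} -> patient risk {p_patient:.3f}")
# List features by the size of their effect on the log-odds.
for feature, c in sorted(contribs.items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature}: {c:+.2f} log-odds")
```

Choosing a baseline is itself a design decision: comparing against a "typical" patient from one population can make the same explanation read differently for patients from another.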

Ethical guidelines and regulatory frameworks should be established to promote fairness and accountability in AI diagnostics. These guidelines should address algorithmic biases, patient privacy, informed consent, and the responsible use of AI technology. Collaboration between policymakers, regulatory bodies, and AI experts is essential to develop comprehensive guidelines that promote equitable and unbiased AI-based diagnostic practices.

In conclusion, AI in diagnostics has the potential to improve healthcare outcomes and reduce disparities. By addressing biases, ensuring access to AI technologies, promoting cultural competence, and establishing transparent and ethical guidelines, AI can contribute to more equitable healthcare. Proactive efforts to address biases and disparities will help harness the transformative power of AI in diagnostics, ensuring that all individuals receive accurate, fair, and timely diagnoses, regardless of their background or circumstances.

Source: OpenAI’s GPT language models, Fleeky, MIB, & Picsart

Thank you for your questions, shares, and comments!

Share your thoughts or questions in the comments below!